GPU allocation suggestion

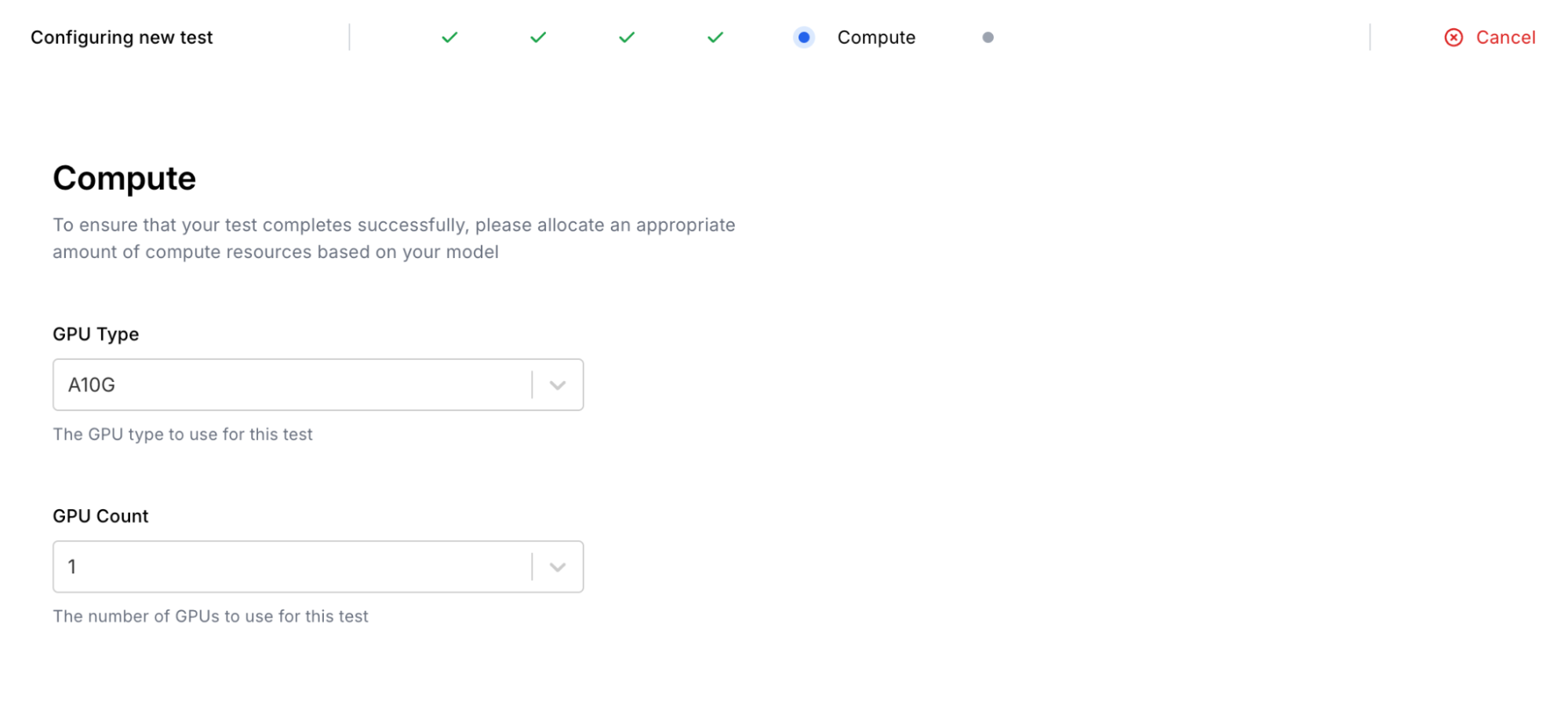

When creating a DynamoEval test from the Dynamo AI SDK or UI, you must specify a GPU configuration for the test to run on. The appropriate configuration depends on the model being evaluated, not on the test type: the same configuration should be valid for any DynamoEval test type.

Below are Dynamo AI's recommendations for an efficient and successful test:

| Model | Size (parameters) | Suggested config | Explanation |
|---|---|---|---|
| Remote models (OpenAI, Azure, Custom AI Gateway) | N/A | 1xA10G: `gpu=GPUConfig(gpu_type=GPUType.A10G, gpu_count=1)` | The model is hosted remotely. A small GPU is used for internal tasks (PII redaction, toxicity classification, similarity scoring, ...). |
| Transformers (Llama, …) | < 1.5B | 1xA10G: `gpu=GPUConfig(gpu_type=GPUType.A10G, gpu_count=1)` | Compute requirements scale with the size of the model being tested. |
| Transformers (Llama, …) | ~3B | 2xA10G: `gpu=GPUConfig(gpu_type=GPUType.A10G, gpu_count=2)` | Compute requirements scale with the size of the model being tested. |
| Transformers (Llama, Mistral) | ~7B | 4xA10G: `gpu=GPUConfig(gpu_type=GPUType.A10G, gpu_count=4)` | Compute requirements scale with the size of the model being tested. |
| Transformers (Llama, Mistral) | ~13B | 8xA10G: `gpu=GPUConfig(gpu_type=GPUType.A10G, gpu_count=8)` | Compute requirements scale with the size of the model being tested. |
| Transformers (Llama, Mistral) | > 13B | Please contact the Dynamo AI team\* | Compute requirements scale with the size of the model being tested. |

\* In Dynamo AI's trial environment, we limit the size of the models due to GPU requirements. Please reach out to us if you're interested in hosting larger models for your GenAI and Evaluation workflows.
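
The recommendations above can be sketched as a small lookup helper. This is only an illustration, not part of the Dynamo AI SDK: the `GPUType` and `GPUConfig` classes below are local stand-ins that mirror the keyword arguments shown in the table, and `suggest_gpu_config` is a hypothetical name. The sketch also assumes that the input is the nominal model size (for example `7` for a "7B" model) and that sizes falling between two rows of the table round up to the larger configuration; the table itself does not specify either.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class GPUType(Enum):
    # Local stand-in for the SDK's GPUType (assumption); only A10G appears in the table.
    A10G = "a10g"


@dataclass(frozen=True)
class GPUConfig:
    # Local stand-in mirroring the keyword arguments used in the table (assumption).
    gpu_type: GPUType
    gpu_count: int


# (nominal size in billions of parameters, suggested number of A10G GPUs)
_TIERS = [(3.0, 2), (7.0, 4), (13.0, 8)]


def suggest_gpu_config(
    params_billion: Optional[float] = None, remote: bool = False
) -> Optional[GPUConfig]:
    """Return the suggested config from the table, or None for models above 13B."""
    if remote:
        # Remote models only need a small GPU for internal tasks.
        return GPUConfig(gpu_type=GPUType.A10G, gpu_count=1)
    if params_billion is None or params_billion <= 0:
        raise ValueError("params_billion must be a positive number for local models")
    if params_billion < 1.5:
        return GPUConfig(gpu_type=GPUType.A10G, gpu_count=1)
    for upper_bound, gpu_count in _TIERS:
        # Assumption: sizes between two rows round up to the larger configuration.
        if params_billion <= upper_bound:
            return GPUConfig(gpu_type=GPUType.A10G, gpu_count=gpu_count)
    # Larger than 13B: no suggested config; contact the Dynamo AI team.
    return None
```

For example, `suggest_gpu_config(7)` yields a four-GPU A10G configuration, while `suggest_gpu_config(70)` returns `None` to signal that the model is beyond the sizes covered by the table.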